February 15, 2010 - By Steven Stallion, OCI Senior Software Engineer

Middleware News Brief (MNB) features news and technical information about Open Source middleware technologies.

INTRODUCTION

Perhaps one of the most critical decisions application designers face when creating a distributed system is how data should be exchanged between interested parties. Typically, this involves selecting one or more communication protocols and determining the most efficient means of dispatching data to each endpoint. Implementing lower-level communications software can be time-consuming, expensive, and prone to error. Too often, after investing a significant amount of time and effort, designers find that last-minute changes in requirements or flaws in the initial design lay waste to this crucial layer of software.

Middleware solutions are designed to aid (if not solve) these types of problems. Rather than have each application implement a custom communications suite, designers may instead rely upon the features present in a given Middleware product to act as a de facto compatibility layer. The Data Distribution Service (DDS) specification is one such solution proposed by the Object Management Group. As described in a prior article, OpenDDS is an open source implementation of the DDS specification that provides a number of pluggable transport options to meet a wide variety of communication needs. Combined with expressive Quality of Service (QoS) facilities, OpenDDS is an ideal solution for those projects which demand a configurable and efficient means of distributing data with little to no risk for the designer.

Starting with version 2.1, OpenDDS provides a new IP multicast transport with improved reliability and better determinism on lossy networks. This article provides a detailed explanation of the multicast transport implementation, its interactions over an IP-based network, and a comparative analysis of other IP-based transports supported by OpenDDS.

IMPLEMENTATION

Starting with version 2.1, support for IP multicast is provided by the multicast transport. The multicast transport was designed as a replacement for the older ReliableMulticast and SimpleMcast transports. Unlike other OpenDDS transport implementations, multicast provides unified support for best-effort and reliable delivery based on transport configuration.

Best-effort delivery imposes the least amount of overhead as data is exchanged between peers; however, it does not provide any guarantee of delivery. Data may be lost due to unresponsive or unreachable peers, or it may be received in duplicate.

Reliable delivery provides for guaranteed delivery of data to associated peers with no duplication at the cost of additional processing and bandwidth. Reliable delivery is achieved through two primary mechanisms: 2-way peer handshaking and negative acknowledgment of missing data. Each of these mechanisms is bounded to ensure deterministic behavior and is configurable to ensure the broadest applicability possible for user environments.

The two mechanisms are:

- 2-way peer handshaking (SYN/SYN+ACK)
- Negative acknowledgment of missing data (NAK/NAK+ACK)

For the purpose of discussing the interactions between reliable peers, it helps to narrow the view of the multicast group to two specific peers: active and passive. The active peer is the publishing side of an association. The passive peer is always the subscribing peer. It is important to remember that control data is exchanged among all peers within the multicast group; however, uninterested peers discard payloads which are not addressed to them directly.

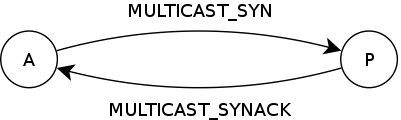

PEER HANDSHAKING (SYN/SYN+ACK)

The first mechanism used to ensure reliability is a 2-way handshake between associated peers. The handshaking mechanism provides a deterministic bound to how long a given peer will wait to form an association. This feature is also used to prevent the publishing peer from sending data too early. This mechanism is detailed below:

The active (i.e. publisher) peer initiates a handshake by sending a MULTICAST_SYN control sample to the passive (i.e. subscriber) peer at periodic intervals bounded by a timeout to ensure delivery. An exponential backoff is observed based on the number of retries.

Upon receiving the MULTICAST_SYN control sample, the passive peer records the current transport sequence value for the active peer and responds with a MULTICAST_SYNACK control sample. This sequence number establishes a value from which reception gaps may be identified in the future.

If the active peer receives a MULTICAST_SYNACK control sample, it then notifies the application that the association is complete. In the event a MULTICAST_SYNACK control sample is not received, an error is logged.

NEGATIVE ACKNOWLEDGMENT (NAK/NAK+ACK)

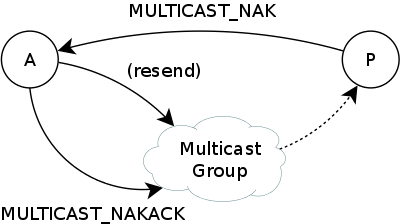

Negative acknowledgment is the primary mechanism for ensuring data reliability. This mechanism places the responsibility of detecting data loss on each subscriber, which allows the publisher to dispatch data as quickly and efficiently as possible. This mechanism is detailed below:

As each datagram is delivered to a passive peer, the transport sequence value is scored against a special container which identifies reception gaps. Periodically, a watchdog executes and ages off the oldest unfulfilled repair requests by resetting the sequence low-water mark and logging an error. Once unfulfilled requests have been purged, the watchdog then checks for reception gaps. In the event a new (or existing) reception gap is identified, the peer records the current interval and the high-water mark for the transport sequence. It then issues MULTICAST_NAK control samples to active peers from which it is missing data. Passive peers perform NAK suppression based on all repair requests destined for the same active peer. If previous MULTICAST_NAK requests have been sent by other passive peers, the passive peer temporarily omits previously requested ranges for the current interval. This interval is randomized to prevent potential collisions among similarly associated peers.

If the active peer receives a MULTICAST_NAK control sample, it examines its send buffer for the missing datagram. If the datagram is no longer available, it sends a MULTICAST_NAKACK control sample to the entire multicast group to suppress further repair requests. If the datagram is still available, it resends the missing data to the entire multicast group.
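As a rough illustration of this step, the sketch below models the active peer's send buffer as a bounded queue keyed by transport sequence number. It is not the OpenDDS implementation: SendBuffer, RepairAction, retain, and on_nak are hypothetical names chosen for this example, and the depth parameter merely plays the role of the nak_depth option.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <string>
#include <utility>

// Hypothetical outcome of a repair request; not an OpenDDS type.
enum class RepairAction { Resend, NakAck };

// Illustrative send buffer: keeps the most recent `depth` datagrams,
// keyed by transport sequence number.
class SendBuffer {
public:
  explicit SendBuffer(std::size_t depth) : depth_(depth) {
    assert(depth_ > 0);
  }

  // Remember a datagram that was just sent, evicting the oldest entry
  // once the buffer already holds `depth_` datagrams.
  void retain(std::uint32_t seq, std::string payload) {
    if (buffer_.size() == depth_) {
      buffer_.pop_front();
    }
    buffer_.emplace_back(seq, std::move(payload));
  }

  // Decide how to answer a repair request for sequence number `seq`:
  // resend if the datagram is still buffered, otherwise answer with a
  // NAKACK so that peers stop asking for it.
  RepairAction on_nak(std::uint32_t seq) const {
    for (const auto& entry : buffer_) {
      if (entry.first == seq) {
        return RepairAction::Resend;
      }
    }
    return RepairAction::NakAck;
  }

private:
  std::size_t depth_;
  std::deque<std::pair<std::uint32_t, std::string>> buffer_;
};
```

A larger depth lets the publisher service older repair requests at the cost of memory; once a datagram has been evicted, the only possible answer is a NAKACK.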

If the passive peer receives a MULTICAST_NAKACK control sample, it behaves just as if the repair request has aged off, suppressing further repair requests and logging an error.
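To make the passive peer's bookkeeping more concrete, the following sketch tracks received sequence numbers above a low-water mark and reports reception gaps as inclusive ranges. ReceptionTracker and its member functions are hypothetical names, not OpenDDS types. The sketch assumes the value recorded during the handshake is the last sequence number already accounted for, and it deliberately leaves out sequence wraparound, timers, and NAK suppression.

```cpp
#include <cassert>
#include <cstdint>
#include <set>
#include <utility>
#include <vector>

// Inclusive range of missing sequence numbers: [first, second].
using Range = std::pair<std::uint32_t, std::uint32_t>;

// Illustrative stand-in for the container that identifies reception gaps.
class ReceptionTracker {
public:
  // `base` is the sequence value recorded during the handshake
  // (assumed here to be the last sequence number already accounted for).
  explicit ReceptionTracker(std::uint32_t base) : low_(base) {}

  // Score an incoming datagram's sequence number.
  void on_datagram(std::uint32_t seq) {
    if (seq <= low_) {
      return;  // old or duplicate datagram
    }
    received_.insert(seq);
    compact();
  }

  // Missing ranges between the low-water mark and the highest
  // sequence number seen so far.
  std::vector<Range> gaps() const {
    std::vector<Range> out;
    std::uint32_t expected = low_ + 1;
    for (std::uint32_t seq : received_) {
      if (seq > expected) {
        out.emplace_back(expected, seq - 1);
      }
      expected = seq + 1;
    }
    return out;
  }

  // Give up on everything before `first_recoverable`, as happens when a
  // repair request ages off or a NAKACK arrives.
  void skip_to(std::uint32_t first_recoverable) {
    if (first_recoverable <= low_ + 1) {
      return;  // nothing to skip
    }
    low_ = first_recoverable - 1;
    received_.erase(received_.begin(),
                    received_.lower_bound(first_recoverable));
    compact();
  }

private:
  // Advance the low-water mark over any contiguous run of received values.
  void compact() {
    while (!received_.empty() && *received_.begin() == low_ + 1) {
      received_.erase(received_.begin());
      ++low_;
    }
  }

  std::uint32_t low_;                // low-water mark
  std::set<std::uint32_t> received_; // received values above the mark
};
```

Each range returned by gaps() corresponds to one candidate repair request; calling skip_to() models the reset of the low-water mark described above.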

CONFIGURATION

The multicast transport supports a number of configuration options:

- The default_to_ipv6 and port_offset options affect how default multicast group addresses are selected. If default_to_ipv6 is set to 1 (enabled) then the default IPv6 address is used. The port_offset option determines the default port used; this value is added to the transport ID to determine the actual port number. The default values are 0 (disabled) and 49400 respectively.

- The group_address option may be used to manually define a multicast group to join to exchange data. Both IPv4 and IPv6 addresses are supported. As with SimpleTcp, OpenDDS IPv6 support requires that the underlying ACE/TAO components be built with IPv6 support enabled. The default value is 224.0.0.128:<port> for IPv4 and [FF01::80]:<port> for IPv6, where <port> is the default port derived from port_offset.

- If reliable delivery is desired, the reliable option may be specified. The remainder of configuration options affect the reliability mechanisms used by the multicast transport. The default value is 1 (enabled).

- The syn_backoff, syn_interval, and syn_timeout configuration options affect the handshaking mechanism. syn_backoff is the exponential base used when calculating the backoff delay between retries. The syn_interval option defines the minimum number of milliseconds to wait before retrying a handshake. The syn_timeout option defines the maximum number of milliseconds to wait before giving up on the handshake. The default values are 2.0, 250, and 30000 (30 seconds) respectively.

  Given the values of syn_backoff and syn_interval, it is possible to calculate the delays between handshake attempts (bounded by syn_timeout):

      delay = syn_interval * syn_backoff ^ <# retries>

  For example, if the default configuration options are assumed, the first handshake attempt is sent immediately and the formula gives delays of 250, 500, 1000, 2000, 4000, 8000, and 16000 milliseconds before the subsequent retries.

- The nak_depth, nak_interval, and nak_timeout configuration options affect the negative acknowledgment mechanism. nak_depth determines the maximum number of datagrams retained by the transport to service incoming repair requests. The nak_interval configuration option defines the minimum number of milliseconds to wait between repair requests. This interval is randomized to prevent potential collisions between similarly associated peers. The maximum delay between repair requests is bounded to double the minimum value. The nak_timeout configuration option defines the maximum amount of time to wait on a repair request before giving up. The default values are 32, 500, and 30000 (30 seconds) respectively.
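The handshake delay formula given for syn_backoff and syn_interval above is easy to evaluate directly. The sketch below is only an illustration: syn_delay_ms and syn_schedule_ms are hypothetical helper names, and the code reads "bounded by syn_timeout" as "stop once a single delay would exceed syn_timeout", which is an assumption about the bound rather than a statement of how the transport enforces it.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// delay = syn_interval * syn_backoff ^ retries, in milliseconds.
double syn_delay_ms(double syn_interval_ms, double syn_backoff,
                    unsigned retries) {
  return syn_interval_ms * std::pow(syn_backoff, static_cast<double>(retries));
}

// Delays for retries 0, 1, 2, ... up to the last delay that does not
// exceed syn_timeout_ms (assumed interpretation of the bound).
std::vector<double> syn_schedule_ms(double syn_interval_ms, double syn_backoff,
                                    double syn_timeout_ms) {
  // A backoff base above 1 guarantees the delays grow, so the loop ends.
  assert(syn_interval_ms > 0.0 && syn_backoff > 1.0);
  std::vector<double> delays;
  for (unsigned retries = 0;; ++retries) {
    const double delay = syn_delay_ms(syn_interval_ms, syn_backoff, retries);
    if (delay > syn_timeout_ms) {
      break;
    }
    delays.push_back(delay);
  }
  return delays;
}
```

Calling syn_schedule_ms(250.0, 2.0, 30000.0) with the default option values yields seven delays, starting at 250 and doubling up to 16000 milliseconds.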

For convenience, an example .ini file is provided in the OpenDDS distribution in the following location: $DDS_ROOT/dds/DCPS/transport/multicast/multicast.ini-dist

Currently, there are a couple of restrictions which must be observed when using the transport:

- At most, each unique multicast group may be used by only one DDS domain.

- A given DomainParticipant object may have only one multicast transport attached for a particular multicast group. For example, an application may not use the same multicast group in transports for both a Publisher and a Subscriber created from the same DomainParticipant object. If an application wishes to both send and receive samples on the same multicast group in the same process, then the application must create two different DomainParticipant objects for the same domain -- one for the Publisher, and another for the Subscriber.

As with other transports, dynamic configuration is enabled by the ACE Service Configurator Framework. A svc.conf file should be created which contains the following:

    dynamic OpenDDS_DCPS_Multicast_Service Service_Object *
      OpenDDS_Multicast:_make_MulticastLoader()

The transport is loaded at runtime by passing the following arguments on the application's command line:

    -ORBSvcConf svc.conf

Alternatively, the transport may be statically loaded by including the dds/DCPS/transport/multicast/Multicast.h header (implies static linking):

    #ifdef ACE_AS_STATIC_LIBS
    # include <dds/DCPS/transport/multicast/Multicast.h>
    #endif

Finally, the multicast transport is configured and attached similarly to other transport implementations:

    OpenDDS::DCPS::TransportImpl_rch transport_impl =
      TheTransportFactory->create_transport_impl(
        OpenDDS::DCPS::DEFAULT_MULTICAST_ID,
        OpenDDS::DCPS::AUTO_CONFIG);

    OpenDDS::DCPS::AttachStatus status = transport_impl->attach(...);
    if (status != OpenDDS::DCPS::ATTACH_OK) {
      exit(EXIT_FAILURE);
    }

INTERACTIONS

When discussing a wire protocol, it is often helpful to provide a graphical representation of protocol interactions. As described above, there are four transport control sample types used by the protocol state machine:

- MULTICAST_SYN
- MULTICAST_SYNACK
- MULTICAST_NAK
- MULTICAST_NAKACK

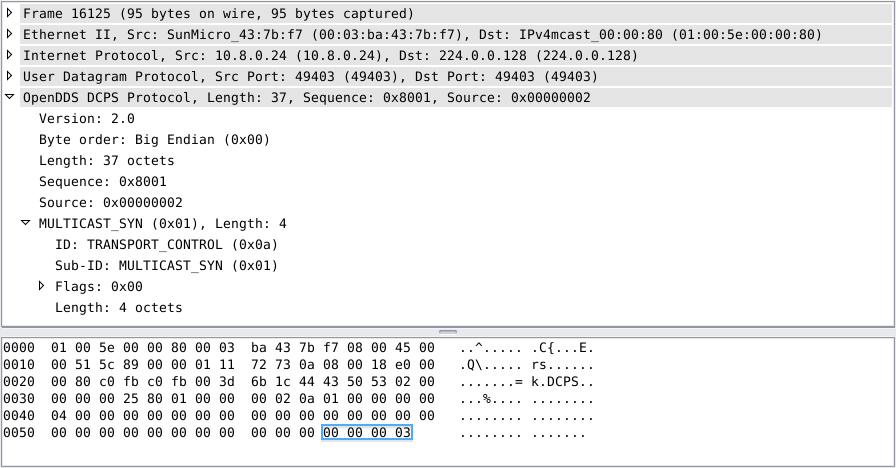

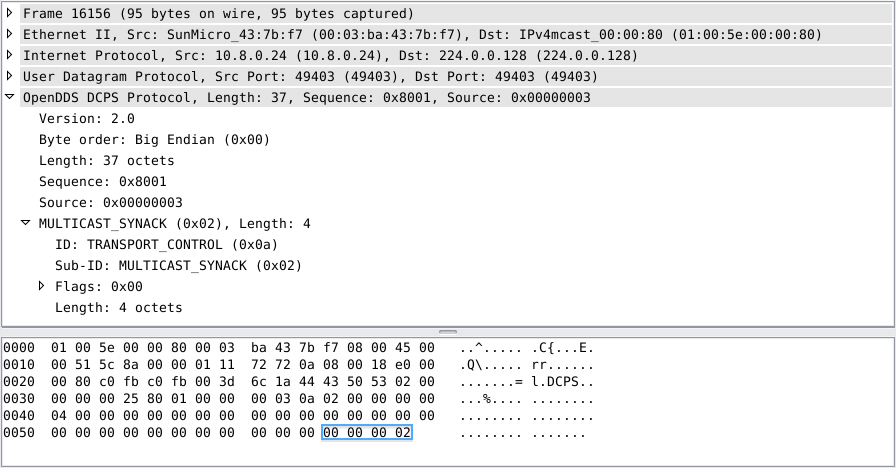

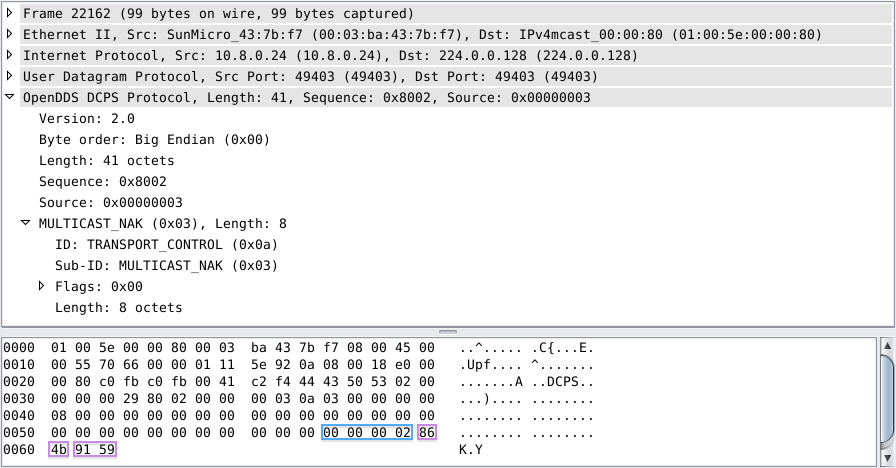

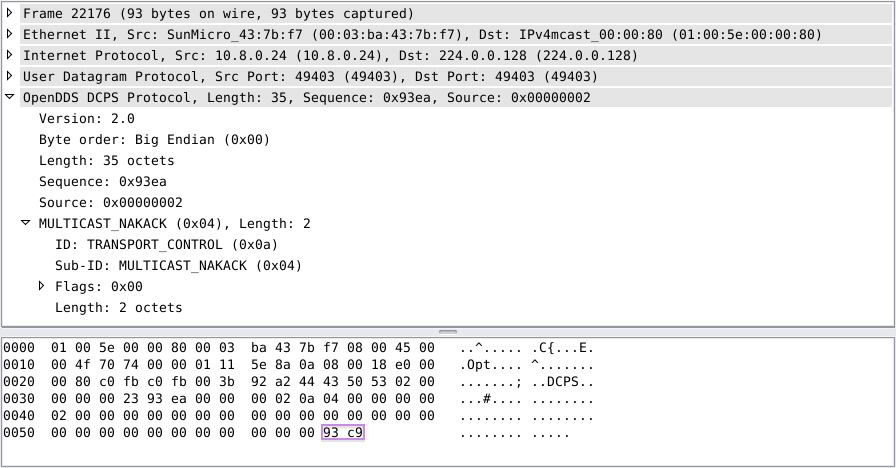

Examples of these PDUs are provided below. These packets were captured from a SunOS (SPARC) workstation using the odds_dissector Wireshark plugin, available in OpenDDS version 2.1.1:

MULTICAST_SYN control samples are sent from active (i.e. publisher) peers. These samples declare an intent to form an association with a specific passive peer. The passive peer is identified by the last four octets (highlighted in blue). This value corresponds to the passive peer's Source ID.

MULTICAST_SYNACK control samples are sent from passive (i.e. subscriber) peers. These samples are sent to acknowledge a handshake attempt by an active peer. When received, the active peer identified by the last four octets (highlighted in blue) completes the association with the passive peer. This value corresponds to the active peer's Source ID.

MULTICAST_NAK control samples are sent from passive peers. These samples are sent when a reception gap has been identified. The octets highlighted in blue indicate the Source ID of the active peer from which it is missing data. The following four octets highlighted in purple indicate the range of missing data. The first two octets indicate the lower bound of the missing range, while the last two octets indicate the upper bound. These values correspond to the sequence numbers of the datagrams.

MULTICAST_NAKACK control samples are sent from active peers. These samples are sent when a repair request cannot be fulfilled. The last two octets (highlighted in purple) indicate the sequence number of the first datagram which can be recovered.

COMPARISON

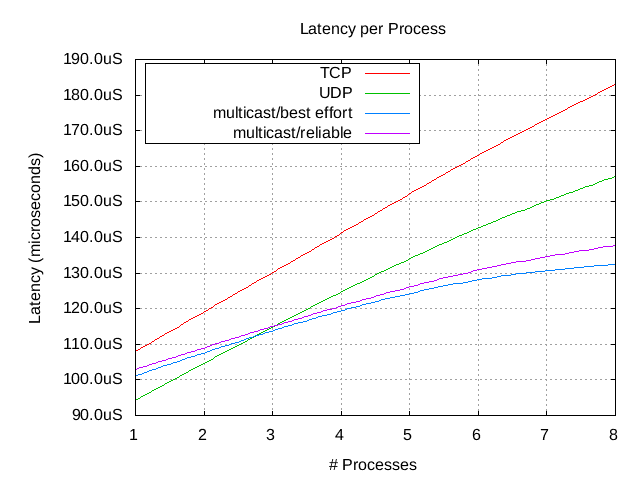

An informal test was constructed to showcase the scalability aspect of IP multicast communication. The scaling test (available as a part of the OpenDDS-Bench benchmarking framework, $DDS_ROOT/performance-tests/Bench) collects latency metrics for dissemination of samples to one or more endpoints. The multicast transport was tested with both reliable and best-effort delivery modes against the udp (UDP/IP) and SimpleTcp (TCP/IP) transports. The following graph represents results collected when running the scaling test between eight similarly configured hosts over a switched ethernet network:

It is important to note that the absolute numbers collected across these test runs should not be used as a basis of comparison. Instead, the relative differences between each data point should be used.

Compared against the unicast IP transports, udp is the only transport which performs better than the multicast transport with respect to latency (notice that latency increases linearly with the number of subscribing endpoints for unicast). The general overhead of dispatching multicast data factors out once the number of subscribing processes is greater than two. This is an indication that unicast transports should still be considered for fan-outs of two or fewer on this particular hardware. Another important point is the relative difference between the reliable and best-effort delivery modes. Reliability does impose a small amount of overhead; however, that overhead does not increase linearly with the number of subscribers as it does with unicast-based transports.

The above results are a good indication of when IP multicast communication should be considered viable. In a scenario where a publisher may have several (i.e. more than two) subscribing endpoints, IP multicast provides the lowest latency when dispatching data. Conversely, IP multicast is unlikely to pay off in scenarios where a publisher maintains only one or two subscribers.

SUMMARY

This article has provided a detailed look at the new IP multicast transport available in OpenDDS version 2.1. The multicast transport provides a desirable solution for those designers who wish to dispatch low-latency data reliably to one or more subscribers. While IP multicast communication has a number of constraints to manage for reliability, we have shown that reliable communication is still possible with a minimal amount of overhead, especially as the number of subscribers scales. OpenDDS is professionally developed and commercially supported by OCI, a full-service software engineering, open source product and training company.

REFERENCES

- Introduction to OpenDDS
  http://www.opendds.org/Article-Intro.html
- Data Distribution Service (DDS) Specification
  http://www.omg.org/spec/DDS/1.2/
- OpenDDS multicast Design Documentation
- OpenDDS Bench User Guide