Micronaut: A Java Framework for the Future, Now

By Zachary Klein, OCI Software Engineer

July 2018

Introduction

In May of this year, a team of developers at OCI released the first milestone of a new open source framework: Micronaut.

Micronaut is an application framework for the JVM with a particular emphasis on microservices and cloud-native applications.

Understandably, in an industry seemingly inundated with framework options, developers generally want to know up front what a new framework brings to the table and what unique features or capability it provides. The goal of this article is to:

- Introduce some of the rationale behind Micronaut

- Highlight some key advantages to using the framework

- Walk you through a simple application to give an overall feel of the framework's constructs and programming style

TL;DR

Micronaut is a JVM framework for building scalable, performant applications using Java, Groovy or Kotlin.

It provides (among many other things) all of the following:

- An efficient compile-time dependency-injection container

- A reactive HTTP server & client based on Netty

- A suite of cloud-native features to boost developer productivity when building microservice systems.

The framework takes inspiration from Spring and Grails, offering a familiar development workflow, but with minimal startup time and memory usage. As a result, Micronaut can be used in scenarios that would not be feasible with traditional MVC frameworks, including Android applications, serverless functions, IoT deployments, and CLI applications.

Rise of the Monolith

Most JVM web applications being developed today are based on frameworks that promote the MVC (Model/View/Controller) pattern and provide dependency-injection, AOP (Aspect-Oriented Programming) support, and ease of configuration.

Frameworks such as Spring Boot and Grails rely on the Spring IoC (Inversion of Control) container, which uses reflection to analyze application classes at runtime and then wire them together to build the dependency graph for the application. Reflection metadata is also used to generate proxies for features such as transaction management.
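To make the runtime cost concrete, here is a minimal sketch of reflection-based constructor injection in plain Java. It is not Spring's implementation; `TinyReflectiveContainer`, `Motor`, and `Car` are hypothetical names invented for this illustration. The point is only that every class must be inspected while the application is starting.

```java
import java.lang.reflect.Constructor;
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration only: a toy container that discovers
// constructor dependencies through reflection at runtime.
class TinyReflectiveContainer {
    private final Map<Class<?>, Object> singletons = new HashMap<>();

    @SuppressWarnings("unchecked")
    <T> T getBean(Class<T> type) {
        Object existing = singletons.get(type);
        if (existing != null) {
            return (T) existing;
        }
        try {
            // Inspect the class at runtime to find out what it needs.
            Constructor<?> ctor = type.getDeclaredConstructors()[0];
            Class<?>[] paramTypes = ctor.getParameterTypes();
            Object[] args = new Object[paramTypes.length];
            for (int i = 0; i < paramTypes.length; i++) {
                args[i] = getBean(paramTypes[i]); // resolve dependencies recursively
            }
            ctor.setAccessible(true);
            Object bean = ctor.newInstance(args);
            singletons.put(type, bean);
            return (T) bean;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot create " + type.getName(), e);
        }
    }
}

class Motor {
    String start() { return "Motor started"; }
}

class Car {
    private final Motor motor;
    Car(Motor motor) { this.motor = motor; }
    String start() { return motor.start(); }
}
```

Calling `new TinyReflectiveContainer().getBean(Car.class)` walks the constructor of `Car`, then of `Motor`, before anything can run. In a real application the same inspection is repeated for every managed class.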

These frameworks bring numerous benefits to developers, including enhanced productivity, reduced boilerplate, and more expressive application code.

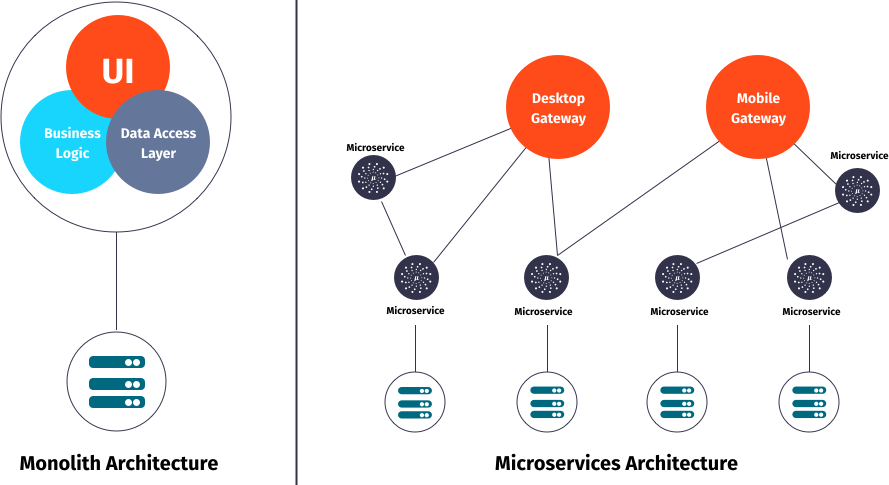

Many of these frameworks were designed around (what is now referred to as) a monolith application – a standalone program that manages the full stack of the application, from the database to the UI. These applications are then packaged as binary files and deployed to a server, typically a servlet container (Tomcat, Glassfish, etc). For a more complete workflow, an embedded container can be included by the framework, making the application more portable.

Cloudy with a Chance of Microservices

Today, these traditional application architectures are being supplanted by new patterns and technologies.

Many organizations are breaking up so-called monolithic applications into smaller, service-oriented applications that work together in a distributed system.

New architectural patterns call for business requirements to be met by the interactions of numerous scope-limited, independent applications: microservices.

Communication across service boundaries – most often via RESTful HTTP calls – is key to this design shift.

However, modern frameworks need to ease not just development, but also operations.

Modern applications are becoming more reliant than ever on cloud computing technologies.

Rather than managing the health of servers and datacenters, organizations are increasingly deploying their applications to platforms where the details of servers are abstracted away, and services can be scaled, redeployed, and monitored using sophisticated tooling and automation.

Upon Further Reflection

Traditional frameworks, of course, have been largely keeping up with the change in the industry, and many developers have successfully built microservices and deployed them to cloud providers using them.

However, the demands of both the new architectures and the cloud environment have revealed some potential pain points when using these tools. Reliance on runtime reflection (for DI and proxy-generation) brings with it several performance issues, both in the time needed to start, analyze, and wire together the application, and in the memory needed to load and cache this metadata.

Unfortunately, these are not fixed metrics in a given application; as a codebase grows in size, so do the resource requirements.

Time and memory are both resources that carry real cost in a cloud platform. Services need to be recycled and brought back online with minimal delay. And as the number of services grows (perhaps into the hundreds on large-scale systems), with multiple instances of each service, it quickly becomes apparent that there are real-world costs to be paid for the convenience of these frameworks.

Additionally, many cloud providers are offering serverless platforms, such as AWS Lambda, where applications are reduced to single-purpose functions that can be composed and orchestrated to perform complex business logic.

Serverless computing adds additional incentive for applications to be lightweight and responsive and consume minimal memory – aggravating the issues with traditional, reflection-based frameworks.

A Better Way

Micronaut was designed with microservices and the cloud in mind, while preserving the MVC programming model and other features of traditional frameworks. This is achieved primarily through a brand new DI/AOP container, which performs dependency injection at compile-time rather than runtime.

By annotating classes and class members in your code, you can express the dependencies and AOP behavior of your application using very similar conventions to Spring; however, the analysis of this metadata is done when the application is compiled. At that point, Micronaut will generate additional classes alongside your own code, creating bean definitions, interceptors, and other artifacts that will enable the DI/AOP behavior when the application is run.

TIP: As a technical aside, this compile-time processing is made possible through the use of Java annotation processors, which Micronaut uses to analyze your classes and create associated bean definition classes. For Groovy support, the framework makes use of AST Transforms to perform the same sort of processing.
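As a rough sketch of the idea, the wiring that a reflective container discovers at startup can instead be written down ahead of time as ordinary classes. The code below is hand-written plain Java, not Micronaut's actual generated output; `Clock`, `Reporter`, the `*Definition` classes, and `SketchContext` are all hypothetical names standing in for what an annotation processor could produce.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical application classes.
class Clock {
    String now() { return "12:00"; }
}

class Reporter {
    private final Clock clock;
    Reporter(Clock clock) { this.clock = clock; }
    String report() { return "Time is " + clock.now(); }
}

// Stand-ins for build-time generated code: plain constructor calls,
// no reflection. These are NOT Micronaut's real generated classes.
class ClockDefinition implements Function<SketchContext, Clock> {
    public Clock apply(SketchContext ctx) { return new Clock(); }
}

class ReporterDefinition implements Function<SketchContext, Reporter> {
    public Reporter apply(SketchContext ctx) {
        return new Reporter(ctx.getBean(Clock.class));
    }
}

class SketchContext {
    private final Map<Class<?>, Function<SketchContext, ?>> definitions = new HashMap<>();
    private final Map<Class<?>, Object> singletons = new HashMap<>();

    SketchContext() {
        // The wiring table is already known before the program starts.
        definitions.put(Clock.class, new ClockDefinition());
        definitions.put(Reporter.class, new ReporterDefinition());
    }

    <T> T getBean(Class<T> type) {
        Object bean = singletons.get(type);
        if (bean == null) {
            Function<SketchContext, ?> definition = definitions.get(type);
            if (definition == null) {
                throw new IllegalArgumentException("No bean of type " + type.getName());
            }
            bean = definition.apply(this);
            singletons.put(type, bean);
        }
        return type.cast(bean);
    }
}
```

Because each definition is just a class with a direct `new` call, startup does no class inspection; the trade-off is that the definitions have to be produced during compilation.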

Micronaut implements the JSR 330 specification for Java dependency injection, which provides a set of semantic annotations under the javax.inject package (such as @Inject and @Singleton) to express relationships between classes within the DI container.

A simple example of Micronaut’s DI is shown in the listing below.

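    import javax.inject.*;

    interface Engine {
        int getCylinders();
        String start();
    }

    @Singleton
    public class V8Engine implements Engine {
        private final int cylinders = 8;

        // Required by the Engine interface
        @Override
        public int getCylinders() {
            return cylinders;
        }

        // Interface methods are implicitly public, so the implementation must be too
        @Override
        public String start() {
            return "Starting V8";
        }
    }

    @Singleton
    public class Vehicle {
        private final Engine engine;

        // Constructor injection: the container supplies the Engine bean
        public Vehicle(Engine engine) {
            this.engine = engine;
        }

        public String start() {
            return engine.start();
        }
    }

When the application is run, a new Vehicle instance will be provided with an instance of the Engine interface – in this case, V8Engine.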

    import io.micronaut.context.*;

    // Start the bean context and look up the Vehicle bean
    Vehicle vehicle = BeanContext.run().getBean(Vehicle.class);
    System.out.println(vehicle.start());

By moving the work of the DI container to the compilation phase, there is no longer a link between the size of the codebase and the time needed to start the application – or the memory required to store reflection metadata.

As a result, Micronaut applications written in Java typically start within a second.

Gradle

    > ./gradlew run
    Task :run
    16:21:32.511 [main] INFO io.micronaut.runtime.Micronaut - Startup completed in 842ms. Server Running: http://localhost:8080

Maven

    > ./mvnw compile exec:exec
    [INFO] Scanning for projects...
    [INFO] --- exec-maven-plugin:1.6.0:exec (default-cli) @ my-java-app ---
    16:22:49.833 [main] INFO io.micronaut.runtime.Micronaut - Startup completed in 796ms. Server Running: http://localhost:8080

Applications written in Groovy and Kotlin may take a second or so due to the overhead of those languages, and use of third-party libraries (such as Hibernate) will also add their own startup and memory requirements. However, the size of the codebase is no longer a significant factor in either startup time or memory usage; the compiled bytecode already includes everything needed to run and manage the DI-aware classes in the application.

The HTTP Layer

The DI core of Micronaut is an essential part of the framework, but exposing services over HTTP (and consuming other services) is another integral part of a microservice architecture.

Micronaut’s HTTP functionality is built on Netty, an asynchronous networking framework that offers high performance, a reactive event-driven programming model, and support for building server and client applications.

In a microservice system, many of your applications will play both of these roles; a server exposing data over the network, and a client making requests against other services in the system.

Like traditional frameworks, Micronaut includes the notion of a controller for serving requests. A simple Micronaut controller is shown below.

HelloController.java

    import io.micronaut.http.annotation.*;

    @Controller("/hello")
    public class HelloController {

        @Get("/{name}")
        public String hello(String name) {
            return "Hello, " + name;
        }
    }

This is a trivial example, but it demonstrates the familiar programming model used by many Java MVC frameworks. Controllers are simply classes with methods, each with meaningful annotations that Micronaut uses to create the necessary HTTP handling code at compile-time.
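To illustrate what binding a path variable such as `{name}` involves, here is a small plain-Java sketch that matches a request path against a template and extracts the variables. `UriTemplateSketch` is a hypothetical class written for this article; Micronaut's own routing is more capable and is not implemented this way.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical helper: matches paths such as "/hello/Bob" against
// templates such as "/hello/{name}" one segment at a time.
class UriTemplateSketch {
    private final String[] segments;

    UriTemplateSketch(String template) {
        this.segments = split(template);
    }

    private static String[] split(String path) {
        String trimmed = path.startsWith("/") ? path.substring(1) : path;
        return trimmed.isEmpty() ? new String[0] : trimmed.split("/");
    }

    // Returns the extracted variables, or empty if the path does not match.
    Optional<Map<String, String>> match(String path) {
        String[] actual = split(path);
        if (actual.length != segments.length) {
            return Optional.empty();
        }
        Map<String, String> variables = new LinkedHashMap<>();
        for (int i = 0; i < segments.length; i++) {
            String segment = segments[i];
            if (segment.startsWith("{") && segment.endsWith("}")) {
                // A {placeholder} segment captures whatever the request supplied.
                variables.put(segment.substring(1, segment.length() - 1), actual[i]);
            } else if (!segment.equals(actual[i])) {
                return Optional.empty();
            }
        }
        return Optional.of(variables);
    }
}
```

With a template of `/hello/{name}`, matching `/hello/Bob` yields a map containing `name` mapped to `Bob`, which is the value a controller method parameter called `name` would receive.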

Equally important in a microservice environment is interacting with other services as a client.

Micronaut has gone the extra mile to make its HTTP client functionality equivalent to that of a server, meaning that the code to consume a service looks uncannily like that needed to create a service.

Here is a simple Micronaut client that will consume the controller endpoint expressed above.

HelloClient.java

    import io.micronaut.http.client.Client;
    import io.micronaut.http.annotation.*;

    @Client("/hello")
    public interface HelloClient {

        @Get("/{name}")
        public String hello(String name);
    }

HelloClient can now be used to interact with a service running at the /hello URI. All the code needed to create the client bean in the DI container, perform the HTTP request, bind arguments, and even parse the response is generated at compile-time.

This client can be used within the sample application, a separate service (assuming the URL is set correctly or service-discovery is enabled), or from within a test class, as shown below.

HelloClientSpec.groovy

    import io.micronaut.context.ApplicationContext
    import io.micronaut.runtime.server.EmbeddedServer
    import spock.lang.*

    class HelloClientSpec extends Specification {

        // start the application
        @Shared
        @AutoCleanup
        EmbeddedServer embeddedServer = ApplicationContext.run(EmbeddedServer)

        // get a reference to HelloClient from the DI container
        @Shared
        HelloClient client = embeddedServer.applicationContext.getBean(HelloClient)

        void "test hello response"() {
            expect:
            client.hello("Bob") == "Hello, Bob"
        }
    }

Because both the client and server methods share the same signature, it is easy to enforce the protocol between both ends of the request by implementing a shared interface, which might be stored in a shared library used across a microservice system.

In our example, both HelloController and HelloClient might implement/extend a shared HelloOperations interface.

HelloOperations.java

    import io.micronaut.http.annotation.*;

    public interface HelloOperations {

        @Get("/{name}")
        public String hello(String name);
    }

HelloClient.java

    @Client("/hello")
    public interface HelloClient extends HelloOperations {/*..*/ }

HelloController.java

    @Controller("/hello")
    public class HelloController implements HelloOperations {/*..*/ }

Reactive by Nature

Reactive programming is a first-class citizen in both Netty and Micronaut.

The controller and client above can easily be rewritten using any Reactive Streams implementation, such as RxJava 2.0. This allows you to write all of your HTTP logic in an entirely non-blocking manner, using reactive constructs such as Observable, Subscriber, and Single.

RxHelloController.java

    import io.micronaut.http.annotation.*;
    import io.reactivex.*;

    @Controller("/hello")
    public class RxHelloController {

        @Get("/{name}")
        public Single<String> hello(String name) {
            return Single.just("Hello, " + name);
        }
    }

RxHelloClient.java

    import io.micronaut.http.annotation.*;
    import io.micronaut.http.client.Client;
    import io.reactivex.*;

    @Client("/hello")
    public interface RxHelloClient {

        @Get("/{name}")
        public Single<String> hello(String name);
    }

Natively Cloud-Native

Cloud-native applications are designed specifically to operate in a cloud-computing environment, interacting with other services in the system and gracefully degrading when other services become unavailable or unresponsive.

Micronaut includes a suite of features that makes building these sorts of applications quite delightful.

Rather than relying on third-party tooling or services, Micronaut provides native solutions for many of the most common requirements.

Let’s look at just a few of them.

1. Service Discovery

Service discovery means that applications are able to find each other (and make themselves findable) on a central registry, getting rid of the need to look up URLs or hardcode server addresses in configuration.
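Conceptually, a registry is a lookup table from a service ID to the addresses of its running instances. The following is a minimal in-memory sketch in plain Java; `RegistrySketch` and the addresses used with it are hypothetical, and a real registry such as Consul adds health checks, replication, and a network API on top of this idea.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical in-memory registry: service ID -> known instance addresses.
class RegistrySketch {
    private final Map<String, List<URI>> services = new ConcurrentHashMap<>();

    // An instance makes itself findable under its service ID.
    void register(String serviceId, URI address) {
        services.computeIfAbsent(serviceId, id -> new CopyOnWriteArrayList<>()).add(address);
    }

    // An instance removes itself, for example on shutdown.
    void deregister(String serviceId, URI address) {
        List<URI> instances = services.get(serviceId);
        if (instances != null) {
            instances.remove(address);
        }
    }

    // A client asks for all instances by ID instead of hardcoding a URL.
    List<URI> lookup(String serviceId) {
        List<URI> instances = services.get(serviceId);
        if (instances == null) {
            return Collections.emptyList();
        }
        return Collections.unmodifiableList(new ArrayList<>(instances));
    }
}
```

A client that knows only the ID `hello-world` can call `lookup("hello-world")` and receive whatever addresses are currently registered, which is the property that removes hardcoded server addresses from configuration.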

Micronaut builds service-discovery support directly into the @Client annotation, meaning that performing service discovery is as simple as supplying the correct configuration and then using the "service ID" of the desired service.

For example, the following configuration will register the Micronaut application with a Consul instance, using a service ID of hello-world.

src/main/resources/application.yml

    micronaut:
        application:
            name: hello-world
    consul:
        client:
            registration:
                enabled: true
            defaultZone: "${CONSUL_HOST:localhost}:${CONSUL_PORT:8500}"

Once the application is started up and registered with Consul, clients can look up the service by simply specifying the service ID in the @Client annotation.

    @Client(id = "hello-world")
    public interface HelloClient {
        //...
    }

Currently available service-discovery providers include Consul and Kubernetes, with support for additional providers planned.

2. Load Balancing

When multiple instances of the same service are registered, Micronaut provides a form of "round-robin" load-balancing, cycling requests through the available instances to ensure that no one instance is overwhelmed or underutilized.

This is a form of client-side load-balancing, where the client itself chooses which registered instance receives each request, spreading the load across available instances automatically.
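The round-robin idea itself is small enough to sketch in plain Java. `RoundRobinSketch` below is a hypothetical class, not Micronaut's load balancer; it assumes the list of instances is fixed, whereas a real implementation would refresh it from the service registry.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical round-robin selector over a fixed list of instances.
class RoundRobinSketch<T> {
    private final List<T> instances;
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinSketch(List<T> instances) {
        if (instances.isEmpty()) {
            throw new IllegalArgumentException("At least one instance is required");
        }
        this.instances = new ArrayList<>(instances);
    }

    // Each call returns the next instance, wrapping around at the end.
    T next() {
        // floorMod keeps the index valid even after the counter overflows.
        int index = Math.floorMod(counter.getAndIncrement(), instances.size());
        return instances.get(index);
    }
}
```

With three instances, four successive calls to `next()` return the first, second, third, and then the first instance again, so no single instance receives consecutive requests while others sit idle.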

This load-balancing happens essentially for "free." However, it is possible to provide an alternate implementation. For example, Netflix’s Ribbon library can be installed and configured to support alternate load-balancing strategies.

src/main/resources/application.yml

    ribbon:
        VipAddress: test
        ServerListRefreshInterval: 2000

3. Retryable and Circuit Breakers

When interacting with other services in a distributed system, it’s inevitable that at some point, things won’t work out as planned; perhaps a service goes down temporarily or simply drops a request. Micronaut offers a number of tools to gracefully handle these mishaps.

For example, any method in Micronaut can be annotated with @Retryable to apply a customizable retry policy to the method. When the annotation is applied to a @Client interface, the retry policy is applied to each request method in the client.

    @Retryable
    @Client("/hello")
    public interface HelloClient { /*...*/ }

By default, @Retryable will attempt to call the method three times, with a one-second delay between each attempt.
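For readers who want to see the behavior spelled out, here is a hand-written plain-Java sketch of a fixed-delay retry policy. `RetrySketch` is a hypothetical helper and not how Micronaut implements @Retryable; in particular, it counts `maxAttempts` as the total number of calls, which is an assumption made for this illustration.

```java
import java.time.Duration;
import java.util.function.Supplier;

// Hypothetical helper, not part of Micronaut: retries a call a fixed
// number of times with a fixed pause between attempts.
class RetrySketch {
    static <T> T call(int maxAttempts, Duration delay, Supplier<T> action) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                lastFailure = e;
                // Only wait if another attempt will follow.
                if (attempt < maxAttempts) {
                    pause(delay);
                }
            }
        }
        // Every attempt failed: surface the most recent failure.
        throw lastFailure;
    }

    private static void pause(Duration delay) {
        try {
            Thread.sleep(delay.toMillis());
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting to retry", e);
        }
    }
}
```

A call such as `RetrySketch.call(3, Duration.ofSeconds(1), () -> client.hello("Bob"))` (with `client` standing for any object whose call may fail) would approximate the default policy described above.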

Of course these values can be overridden, for example:

    @Retryable( attempts = "5", delay = "2s" )
    @Client("/hello")
    public interface HelloClient { /*...*/ }

And if hard-coding values leaves a bad taste in your mouth, you can inject the values from configuration, optionally providing defaults if no configuration is provided.

    @Retryable( attempts = "${book.retry.attempts:3}",
                delay = "${book.retry.delay:1s}" )
    @Client("/hello")
    public interface HelloClient { /*...*/ }

A more sophisticated form of @Retryable is the @CircuitBreaker annotation. It behaves slightly differently in that it will allow a specified number of attempts to fail before "opening" the circuit for a given reset period (30 seconds by default), causing the method to fail immediately without executing the code.

This can help prevent struggling services or other downstream resources from being overwhelmed by requests, giving them a chance to recover.
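The open/closed behavior can be sketched in a few lines of plain Java. `CircuitBreakerSketch` is a hypothetical class written for this article, not Micronaut's implementation; the injectable clock is an assumption added so the reset period can be exercised without waiting.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Hypothetical circuit breaker: opens after a number of consecutive
// failures and fails fast until the reset period has elapsed.
class CircuitBreakerSketch {
    private final int failureThreshold;
    private final long resetMillis;
    private final LongSupplier clock; // supplies the current time in milliseconds
    private int failures = 0;
    private boolean open = false;
    private long openedAt = 0;

    CircuitBreakerSketch(int failureThreshold, long resetMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.resetMillis = resetMillis;
        this.clock = clock;
    }

    synchronized <T> T call(Supplier<T> action) {
        if (open) {
            if (clock.getAsLong() - openedAt < resetMillis) {
                // Fail immediately without touching the struggling service.
                throw new IllegalStateException("Circuit is open");
            }
            // Reset period elapsed: allow one trial call through.
            open = false;
            failures = failureThreshold - 1;
        }
        try {
            T result = action.get();
            failures = 0;
            return result;
        } catch (RuntimeException e) {
            failures++;
            if (failures >= failureThreshold) {
                open = true;
                openedAt = clock.getAsLong();
            }
            throw e;
        }
    }
}
```

In production code the clock would typically be `System::currentTimeMillis`. While the circuit is open the wrapped action is never invoked, which is what gives the downstream service room to recover.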

    @CircuitBreaker( attempts = "3", reset = "20s")
    @Client("/hello")
    public interface HelloClient { /*...*/ }

Building a Micronaut App

The best way to really learn a framework is to begin playing with it yourself, so we will conclude our overview of Micronaut with a step-by-step guide to building your first application.

As a bonus, we will also go one step further and actually deploy our "microservice" as a container to a cloud provider – in this case, Google Compute Engine.

Step 1: Installing Micronaut

Micronaut can be built from the source on GitHub or downloaded as a binary and installed on your shell path. However, the recommended way to install Micronaut is via sdkman.

If you do not have sdkman installed already, you can do so in any Unix-based shell with the following commands:

    > curl -s "https://get.sdkman.io" | bash
    > source "$HOME/.sdkman/bin/sdkman-init.sh"
    > sdk version
    SDKMAN 5.6.4+305

You can now install Micronaut itself with the following sdkman command. (Use sdk list micronaut to view available versions. Currently the latest is 1.0.0.M2.)

    > sdk install micronaut 1.0.0.M2

Confirm that you have installed Micronaut by running mn -v.

    mn -v
    | Micronaut Version: 1.0.0.M2
    | JVM Version: 1.8.0_171

Step 2: Create the Project

The mn command serves as Micronaut’s CLI. You can use this command to create your new Micronaut project.

For this exercise, we will create a stock Java application, but you can also choose Groovy or Kotlin as your preferred language by supplying the -lang flag (-lang groovy or -lang kotlin).

The mn command accepts a features flag, where you can specify features that add support for various libraries and configurations in your project. You can view available features by running mn profile-info services.

We’re going to use the spock feature to add support for the Spock testing framework to our Java project. Run the following command:

    mn create-app example.greetings -features spock
    | Application created at /Users/dev/greetings

Note that we can supply a default package prefix (example) to the project name (greetings).

If we did not do so, the project name would be used as a default package. This package will contain the Application class and any classes generated using the CLI commands (as we will do shortly).

By default, the create-app command will generate a Gradle build. If you prefer Maven as your build tool, you can select it using the -build flag.

At this point, you can run the application using the Gradle run task.

    ./gradlew run
    Starting a Gradle Daemon (subsequent builds will be faster)
    > Task :run
    03:00:04.807 [main] INFO io.micronaut.runtime.Micronaut - Startup completed in 1109ms. Server Running: http://localhost:37619

Notice that the server port is randomly selected each time the application is run. This makes sense when using a service discovery solution to locate instances, but for our exercise it would be convenient to set the port number to a known value, like 8080. We’ll do that in the following step.
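As an aside, one common way for a JVM process to obtain a free port is to ask the operating system for one by binding to port 0. The sketch below shows that technique in plain Java; `FreePortSketch` is a hypothetical class, and this is not necessarily how Micronaut chooses its port.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.ServerSocket;

// Hypothetical helper: asks the operating system for any free TCP port.
class FreePortSketch {
    static int findFreePort() {
        // Port 0 tells the OS to pick an unused port for this socket.
        try (ServerSocket socket = new ServerSocket(0)) {
            return socket.getLocalPort();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

The returned number differs from run to run, which is why a fixed, configured port is more convenient for a walkthrough like this one.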

TIP: If you would like to run your Micronaut project using an IDE, be sure that your IDE supports Java annotation processors and that this support is enabled for your project. In IntelliJ IDEA, the relevant setting can be found under Preferences → Build, Execution, Deployment → Compiler → Annotation Processors → Enabled.

Step 3: Configuration

The default configuration format in Micronaut is YAML, although other formats, including Java properties files, Groovy config, and JSON, are supported.

The default configuration file is located at src/main/resources/application.yml. Let’s edit that file to set our server port number.

src/main/resources/application.yml

    micronaut:
        application:
            name: greetings
        server:
            port: 8080

If you restart the application, you’ll see it run on http://localhost:8080.

Step 4: Writing the Code

Within your project directory, run the mn command by itself to start the Micronaut CLI in interactive mode.

    > mn
    | Starting interactive mode...
    | Enter a command name to run. Use TAB for completion:
    mn>

Run the following three commands to generate a controller, a client, and a service bean.

    mn> create-controller GreetingController
    | Rendered template Controller.java to destination src/main/java/greetings/GreetingController.java
    | Rendered template ControllerSpec.groovy to destination src/test/groovy/greetings/GreetingControllerSpec.groovy
    mn> create-client GreetingClient
    | Rendered template Client.java to destination src/main/java/greetings/GreetingClient.java
    mn> create-bean GreetingService
    | Rendered template Bean.java to destination src/main/java/demo/GreetingService.java

Edit the generated files as shown in the next three listings.

src/main/java/example/GreetingController.java

    package example;

    import io.micronaut.http.annotation.Controller;
    import io.micronaut.http.annotation.Get;
    import io.reactivex.Single;
    import javax.inject.Inject;

    @Controller("/greeting")
    public class GreetingController {

        @Inject
        GreetingService greetingService;

        @Get("/{name}")
        public Single<String> greeting(String name) {
            return greetingService.message(name);
        }
    }

src/main/java/example/GreetingClient.java

    package example;

    import io.micronaut.http.client.Client;
    import io.micronaut.http.annotation.Get;
    import io.reactivex.Single;

    // Leading slash added so the client path mirrors @Controller("/greeting")
    @Client("/greeting")
    public interface GreetingClient {

        @Get("/{name}")
        Single<String> greeting(String name);
    }

src/main/java/example/GreetingService.java

    package example;

    import io.reactivex.Single;
    import javax.inject.Singleton;

    @Singleton
    public class GreetingService {

        public Single<String> message(String name) {
            return Single.just("Hello, " + name);
        }
    }

With our controller, client, and service classes written, if we run the application again, we should be able to make a request, such as the curl command shown below.

    > curl http://localhost:8080/greeting/Beth
    Hello, Beth

Let's edit the generated GreetingControllerSpec to make use of our client interface.

src/test/groovy/example/GreetingControllerSpec.groovy

    package example

    import io.micronaut.context.ApplicationContext
    import io.micronaut.runtime.server.EmbeddedServer
    import spock.lang.AutoCleanup
    import spock.lang.Shared
    import spock.lang.Specification

    class GreetingControllerSpec extends Specification {

        @Shared @AutoCleanup EmbeddedServer embeddedServer = ApplicationContext.run(EmbeddedServer)

        void "test greeting"() {
            given:
            GreetingClient client = embeddedServer.applicationContext.getBean(GreetingClient)

            expect:
            client.greeting("Beth").blockingGet() == "Hello, Beth"
        }
    }

Run ./gradlew test to execute the test (or execute it within your IDE, if you have annotation processing enabled).

    ./gradlew test
    BUILD SUCCESSFUL in 6s

Step 5: Into the Cloud

In order to deploy our application we’ll need to generate a runnable build artifact. Run the shadowJar Gradle task to create an executable "fat" JAR file.

    > ./gradlew shadowJar
    BUILD SUCCESSFUL in 6s
    3 actionable tasks: 3 executed

Test that your JAR file runs as expected using the java -jar command.

    java -jar build/libs/greetings-0.1-all.jar
    03:44:50.120 [main] INFO io.micronaut.runtime.Micronaut - Startup completed in 847ms. Server Running: http://localhost:8080

The next few steps have been taken from documentation on Google Cloud’s website. In order to follow these steps you will need a Google Cloud account with billing enabled.

Google Cloud Setup

- Create a project from the Google Cloud Console.

- Ensure that Compute Engine and Cloud Storage APIs are enabled in your API Library.

- Install the Google Cloud SDK. Run gcloud init to initialize the SDK and choose the project you created in the first item of this list.

Upload JAR

- Create a new Google Storage bucket to store the JAR file. Keep a note of the bucket name: greetings in this example.

      > gsutil mb gs://greetings

- Upload the greetings-0.1-all.jar file to the new bucket.

      gsutil cp build/libs/greetings-0.1-all.jar gs://greetings/greetings.jar

Create Instance Startup Script

Google Compute allows you to provision a new instance using a Bash script. Create a new file within the project directory named instance-startup.sh. Add the following content:

    #!/bin/sh

    # Set up instance metadata
    PROJECTID=$(curl -s "http://metadata.google.internal/computeMetadata/v1/project/project-id" -H "Metadata-Flavor: Google")
    BUCKET=$(curl -s "http://metadata.google.internal/computeMetadata/v1/instance/attributes/BUCKET" -H "Metadata-Flavor: Google")

    echo "Project ID: ${PROJECTID} Bucket: ${BUCKET}"

    # Copy the JAR file from the bucket
    gsutil cp gs://${BUCKET}/greetings.jar .

    # Update and install/configure dependencies
    apt-get update
    apt-get -y --force-yes install openjdk-8-jdk
    update-alternatives --set java /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java

    # Start the application
    java -jar greetings.jar

Configure Compute Engine

- Run the following command to create the Compute instance, using the instance-startup.sh script and the bucket name you used in the previous steps.

      gcloud compute instances create greetings-instance \
          --image-family debian-9 --image-project debian-cloud \
          --machine-type g1-small --scopes "userinfo-email,cloud-platform" \
          --metadata-from-file startup-script=instance-startup.sh \
          --metadata BUCKET=greetings --zone us-east1-b --tags http-server

- The instance will be initialized and begin to start up immediately. This may take a few minutes.

- Run the following command periodically to view the instance logs during the startup process. If all goes well, you should see a "Finished running startup scripts" message once this process completes.

      > gcloud compute instances get-serial-port-output greetings-instance --zone us-east1-b

- Run the following command to open up HTTP traffic to port 8080.

      gcloud compute firewall-rules create default-allow-http-8080 \
          --allow tcp:8080 --source-ranges 0.0.0.0/0 \
          --target-tags http-server --description "Allow port 8080 access to http-server"

- Get the external IP of your Compute instance with the following command:

      gcloud compute instances list
      NAME                ZONE        MACHINE_TYPE  PREEMPTIBLE  INTERNAL_IP  EXTERNAL_IP     STATUS
      greetings-instance  us-east1-b  g1-small                   10.142.0.3   35.231.160.118  RUNNING

You should now be able to access your application using the EXTERNAL_IP.

    > curl 35.231.160.118:8080/greeting/World
    Hello, World

Onwards and Upwards

At the time of writing, Micronaut is still in the early stages of development, and there is still plenty of work to be done. However, an incredible amount of functionality is already available in the current milestone releases.

In addition to the features discussed in this article, there is support for the following:

- Security (using either JWT, sessions, or basic auth)

- Management endpoints

- Auto-configurations for data access using Hibernate, JPA, and GORM

- Support for batch jobs using @Scheduled

- Configuration sharing

- And much more

The definitive reference for developing with Micronaut is the user guide at http://docs.micronaut.io.

A small but growing selection of step-by-step tutorials is available at http://guides.micronaut.io as well, including guides for all three of Micronaut’s supported languages: Java, Groovy, and Kotlin.

The Micronaut community channel on Gitter is an excellent place to meet other developers who are already building applications with the framework, as well as to interact with the core development team.

Time will tell what impact Micronaut will have on microservice development and the industry as a whole, but it seems clear that the framework has already contributed a major advance to how applications will be built in the future.

Cloud-native development is certainly here to stay, and Micronaut is the latest example of a tool that was built with this landscape in mind. Like the architecture that motivated its creation, Micronaut’s flexibility and modularity will allow developers to create systems that even its designers could not have foreseen.

The future of development on the JVM is bright, if a bit cloudy, and Micronaut is sure to play an important part. Start the countdown!

Resources

- Micronaut website: http://micronaut.io

- Micronaut Documentation: http://docs.micronaut.io

- Micronaut Guides: http://guides.micronaut.io

- Gitter Community: https://gitter.im/micronautfw

Software Engineering Tech Trends (SETT) is a regular publication featuring emerging trends in software engineering.